The relationship between artificial intelligence (AI) and energy consumption is a critical paradox of our time. On one hand, AI is an indispensable tool for accelerating the global clean energy transition, from optimizing smart grids to designing more efficient batteries. On the other, the rapid expansion of generative AI is fueling a surge in energy demand that could undermine our sustainability goals.

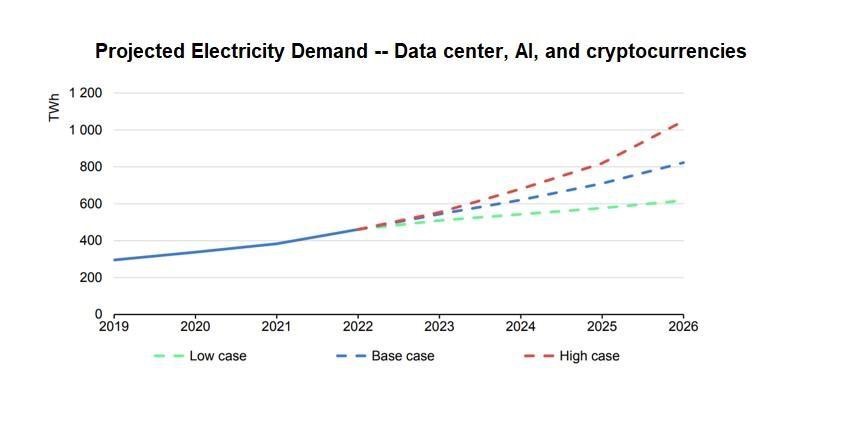

The numbers are stark. Training a single, sophisticated AI model can result in greenhouse gas emissions equivalent to the annual carbon footprint of an average citizen in France. The International Energy Agency has highlighted that AI queries consume significantly more electricity than traditional online searches. As these systems proliferate, the energy demands from the AI sector could match the total electricity usage of entire countries like Sweden or Germany.

Navigating this complexity requires a concerted, multi-pronged effort. We must move beyond simply acknowledging the problem and focus on actionable, innovative solutions that align AI's immense potential with our urgent sustainability imperatives. The future of AI is not just about what it can do, but how it can do it responsibly.

A new strand of AI strategy, notably from China, reframes the competition as a fundamental physics problem: how efficiently can energy be converted into useful intelligence? This "energy-compute theory" suggests that a nation's dominance in AI will be determined by its capacity to maximize inference energy efficiency, not just by its ability to build the biggest models.

This thinking marks a strategic pivot from the "race to AGI" (Artificial General Intelligence) to the pursuit of "ubiquitous edge intelligence," according to Professor Wang Yu of Tsinghua University. The goal is to maximize "intelligence per watt," so that AI becomes efficient and accessible across a range of applications, especially those operating at the "edge," such as robotics and smart devices.

To achieve this, countries and companies are using robotics and heterogeneous chip stacks as forcing functions to unlock compound gains in efficiency. American firms are also acutely aware of this challenge: computation uses energy, and energy costs money. For example, OpenAI's GPT-5 uses a "router" to steer simple tasks to smaller, cheaper models. Meanwhile, Google has announced significant progress, reducing the energy consumption of its large language models by a factor of 33 in the past year, with an average Gemini prompt now consuming only 0.24 Wh. To put that into perspective, an hour of Netflix on a big-screen TV uses as much energy as 400 typical Gemini queries.
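The figures quoted above can be checked with a few lines of arithmetic. The sketch below uses only the numbers from the text (0.24 Wh per prompt, 400 queries per TV hour, a 33-fold reduction); the function and constant names are illustrative, and the "a year earlier" value is simply what the stated factor implies, not a separately reported measurement.

```python
# Figures quoted in the text above.
WH_PER_GEMINI_PROMPT = 0.24   # average energy per prompt, in watt-hours
QUERIES_PER_TV_HOUR = 400     # queries said to equal one hour of big-screen streaming
REDUCTION_FACTOR = 33         # reported efficiency gain over the past year


def queries_to_wh(n_queries: int, wh_per_query: float = WH_PER_GEMINI_PROMPT) -> float:
    """Total energy in watt-hours for n_queries prompts."""
    return n_queries * wh_per_query


# Energy of one TV hour, as implied by the 400-query comparison.
tv_hour_wh = queries_to_wh(QUERIES_PER_TV_HOUR)

# Per-prompt energy a year earlier, as implied by the 33x reduction.
implied_earlier_wh = WH_PER_GEMINI_PROMPT * REDUCTION_FACTOR

print(f"One TV hour, per the comparison: {tv_hour_wh:.0f} Wh")
print(f"Implied per-prompt energy a year earlier: {implied_earlier_wh:.2f} Wh")
```

In other words, the comparison puts an hour of big-screen streaming at roughly 96 Wh, and the stated 33-fold gain implies that a typical prompt would have used close to 8 Wh a year earlier.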

The energy-intensive nature of AI is fundamentally a hardware challenge. The training and inference phases of AI models, which are both essential for their operation, demand immense computational power. The selection of the right hardware is a foundational step toward mitigating this environmental impact.

Specialized Processors: While traditional CPUs are versatile, specialized processors such as Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs) are designed for parallel processing, making them far more energy-efficient for AI workloads. Advanced TPUs, for instance, can be up to 30 times more energy-efficient than their predecessors.

Customized Solutions: Tailoring hardware setups to specific AI tasks can yield significant energy savings. For instance, high memory bandwidth may be more beneficial for certain applications, while others might require faster processing cores. Custom-built hardware solutions that co-design AI models with TPUs can maximize performance and energy efficiency, ensuring that software utilizes the full potential of the hardware.

Neuromorphic Computing: The future of energy-efficient AI may lie in emerging technologies like neuromorphic computing. By mimicking the architecture of the human brain, these chips can process information with unprecedented efficiency. Although still in the research phase, this technology holds the promise of a revolution in sustainable AI.

Hardware is only one part of the solution. The way we deploy and operate AI models is equally critical. For too long, the industry has prioritized speed and scale, but a shift toward carbon-aware computing is now underway.

While a significant portion of AI's energy use comes from training large models, the greatest long-term contributor to emissions is model inference, the real-time use of AI. This is where innovation in operational practices is crucial.

Algorithmic Efficiency: New research is exploring techniques like model pruning, early stopping, and transfer learning, which can reduce the computational resources needed during training. For example, some methods have shown up to an 80% reduction in energy consumed during model training without sacrificing performance.
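Of the techniques named above, early stopping is the simplest to illustrate: training halts once the validation loss stops improving, so the remaining epochs (and the energy they would consume) are never run. The following is a minimal sketch with hypothetical loss values and parameter names; it is not meant to reproduce the 80% figure, only to show the mechanism.

```python
from typing import Sequence


def early_stopping_epoch(val_losses: Sequence[float], patience: int = 2,
                         min_delta: float = 0.0) -> int:
    """Return the number of epochs run before training would stop.

    Training stops once the validation loss has failed to improve by more
    than min_delta for `patience` consecutive epochs.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            # New best loss: reset the counter.
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    # Never triggered: the full budget was used.
    return len(val_losses)


# Hypothetical validation losses for a 10-epoch budget.
losses = [0.90, 0.70, 0.60, 0.58, 0.59, 0.60, 0.61, 0.62, 0.63, 0.64]
stopped_at = early_stopping_epoch(losses, patience=2)
epochs_saved = len(losses) - stopped_at
print(f"Stopped after epoch {stopped_at}, skipping {epochs_saved} epochs")
```

With these made-up numbers, training stops after epoch 6 and the last four epochs are skipped. Real savings depend on the model, the data, and how `patience` is tuned.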

Carbon-Aware Scheduling: AI workloads can be scheduled to run during times when the local electricity grid is powered by low-carbon sources, such as solar or wind power. This strategic scheduling can significantly reduce the carbon footprint of AI operations, even if the total energy consumption remains high.
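As a rough illustration of carbon-aware scheduling, the sketch below picks the start hour for a fixed-length job so that it runs during the cleanest stretch of a grid carbon-intensity forecast. The forecast values, the 10 kW power draw, and the function names are all hypothetical; a real scheduler would pull the forecast from a grid operator or data provider and would also have to respect deadlines and capacity.

```python
from typing import Sequence


def best_start_hour(intensity: Sequence[float], duration_h: int) -> int:
    """Index of the start hour whose window has the lowest total carbon intensity."""
    if duration_h <= 0 or duration_h > len(intensity):
        raise ValueError("duration_h must be between 1 and len(intensity)")
    window = sum(intensity[:duration_h])
    best_sum, best_start = window, 0
    for start in range(1, len(intensity) - duration_h + 1):
        # Slide the window one hour forward.
        window += intensity[start + duration_h - 1] - intensity[start - 1]
        if window < best_sum:
            best_sum, best_start = window, start
    return best_start


def job_emissions_g(intensity: Sequence[float], start: int,
                    duration_h: int, power_kw: float) -> float:
    """Grams of CO2 for a constant-power job: kWh in each hour times gCO2/kWh."""
    return sum(power_kw * g for g in intensity[start:start + duration_h])


# Hypothetical 12-hour forecast in gCO2 per kWh, with a midday solar dip.
forecast = [450, 430, 400, 320, 210, 150, 140, 180, 290, 380, 420, 440]

start = best_start_hour(forecast, duration_h=3)
now = job_emissions_g(forecast, 0, 3, power_kw=10.0)
shifted = job_emissions_g(forecast, start, 3, power_kw=10.0)
print(f"Run now: {now / 1000:.1f} kg CO2; start at hour {start}: {shifted / 1000:.1f} kg CO2")
```

In both cases the job draws the same 30 kWh; only the timing changes. With this invented forecast, delaying the start by five hours cuts emissions from 12.8 kg to 4.7 kg of CO2.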

Renewable Energy Integration: The most sustainable data centers are those that directly leverage renewable energy sources. This includes securing long-term contracts with renewable energy producers or investing in local clean energy projects. This approach aligns energy consumption with sustainability goals and reduces reliance on fossil fuels.

The future of AI is intertwined with the future of our planet. As AI models become more complex and widespread, the challenge of managing their energy footprint will only intensify. This is a problem that no single company or country can solve alone. Researchers, policymakers, and industry leaders must foster a new era of collaboration. This includes establishing more transparent reporting on AI's energy usage, incentivizing the development of energy-efficient hardware and algorithms, and integrating sustainability into national AI strategies. AI has the power to unlock new levels of efficiency and drive the energy transition forward. By developing AI with a core commitment to sustainability, from the algorithms we write to the data centers we build, we can ensure that this technology becomes a powerful force for a greener, more prosperous future.

Photo credits: Getty Images For Unsplash+